Archive for September, 2009

Bash/Perl tutorial

| Gabriel |

I’m a big fan of the idea of using Unix tools like Perl to script the cleaning of the massive text-based datasets that social scientists (especially sociologists of culture) often use. Unfortunately there’s something of a learning curve to this so even though I like the idea in principle and increasingly in practice, I still sometimes clean data interactively with TextWrangler and just try to keep good notes.

Fortunately two of my UC system colleagues have posted the course materials for a “Unix and Perl Primer for Biologists.” I’m about halfway through the materials and they’re great, in part because (unlike the llama) they assume no prior familiarity with programming or Unix. Although the examples involve genetics, it’s well-suited for social scientists as, like us, biologists are not computer scientists but are reasonably technically competent and they often deal with large text-based datasets. Basically, if you can write a Stata do-file, you should be able to follow their course guide, and if you already use things like scalars and loops it should be pretty easy.

I highly recommend the course to any social scientist who deals with large dirty datasets, in other words, basically anyone who is a quant but doesn’t just download clean ICPSR or Census data. This is especially relevant for anyone who wants to scrape data off the web, use IMDB, do large-scale content analysis, etc.

Some notes:

- They assume you will a) be running the materials off a stick and b) be using Mac OS X. If you’re keeping the material on the hard drive, get used to typing “chmod u+x foo.pl” to make the Perl script “foo.pl” executable. (This step is unnecessary for files on a stick because, unlike HFS+ or ext3, the FAT filesystem doesn’t do permissions.) If you’re using a different version of Unix, most of it should work similarly with only a few minor differences: you’ll want to use Kate instead of Smultron, and whereas on a Mac a USB stick is in /Volumes/, in Linux it’s in /media/ and in BSD it’s in /mnt/. If you’re using Windows you’ll need to either a) install Cygwin, b) install a virtual machine, c) run off a live CD or bootable stick, or d) dual boot with Wubi.

- If you’re really used to Stata, some of the nomenclature may seem backwards, mostly because Perl doesn’t keep a dataset in memory but processes it on disk, one command at a time. So, in Perl and Bash a “variable” is the equivalent of what Stata calls a (global or local) “macro.” The closest Perl equivalent to what Stata calls a “variable” would be a “field” in a tab-delimited text file.
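To make the “field” versus “variable” distinction concrete, here is a minimal sketch of the read-a-line, split-into-fields, clean, move-on pattern. It is written in Python rather than Perl purely for illustration, and the function name `clean_rows`, the two-column layout, and the sample titles are all hypothetical stand-ins, not anything from the course materials.

```python
import io

def clean_rows(lines, keep_field=1):
    """Yield one cleaned field per input line, without loading the whole file."""
    for line in lines:
        # each line is one record; tab-separated fields play the role of Stata "variables"
        fields = line.rstrip("\n").split("\t")
        if len(fields) <= keep_field:
            continue  # skip malformed rows that lack the field we want
        yield fields[keep_field].strip().lower()

# hypothetical two-column data standing in for a large file on disk
sample = io.StringIO("id\ttitle\n1\t  Oil! \n2\tThere Will Be Blood\n")
next(sample)  # skip the header row
cleaned = list(clean_rows(sample))
print(cleaned)
```

With a real dataset you would pass an open file handle instead of the `io.StringIO` object, so only one line is ever held in memory at a time.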

[Update: Although they suggest Smultron, I find TextMate works even better as it can execute scripts entirely within the editor, so you don’t have to constantly cmd-tab to Terminal.app and back.]

I drink your agency!

| Gabriel |

A few weeks ago I read (well, listened to an audiobook of) Upton Sinclair’s Oil! and was both impressed and surprised by it.

First, although a few scenes are almost identical, overall the book is very different from There Will Be Blood, although both are well worth reading/watching. The central character in the book is the son, not the father. The father (J. A. Ross in the book) is a basically sympathetic character completely lacking the seething misanthropy that characterized the father (Daniel Plainview) in the movie. In the book Paul is a central character whereas Eli is a footnote, the opposite of the movie where Eli is important and Paul is almost a phantasm.

Second, all of these changes in character and plot are directly related to the change in theme. The film is all about the clash between Wirtschaft und Gemeinschaft as personified in a decades-long fight between a miserable bastard of an entrepreneur and a miserable bastard of a preacher. So Daniel Plainview flat out says “I hate most people” and he means it. In contrast, the book is about the clash between capital and labor, which is manifested despite the good will of all the major characters. J. A. Ross the entrepreneur and Paul Watkins the proletarian are both sympathetic characters and in fact are friends with each other. Their opposition is not personal, but political, and these political differences are driven pretty directly by opposing material interests. In the book, as in Marx, class cohesion is emergent from the structure of economic relations and not reliant on the personal ideologies of the various individuals involved. Consistent with this highly structural approach was making the protagonist the son, Bunny, instead of the father. Bunny is a fairly passive character who develops very gradually and the only break with structural determinism in the book is that Bunny slowly rejects his father’s ambition that he continue in the oil business and instead founds a socialist newspaper and later, college.

I thought this choice of making the action occur despite the characters’ inner motivation was both in keeping with the semi-Marxist tone of the book and surprisingly effective as a dramatic device. Of course when you recall that the book came first, the question is not just why did Sinclair write a materialist dialectic novel, but why, in the course of adapting it, did Anderson make it more conventional by emphasizing agency and personality? I have to think that the answer is that despite the careful wardrobe and set decoration to evoke pre-war California, the movie is thematically very much of our era. By era, I don’t mean “post-1989 when socialism was dead” (although that too) but even more importantly the post-1970 era. In politics this means the new social movements that changed the focus of politics from social class and redistribution to identity and self-expression, even while the Berlin Wall was still standing. In art in general and film in particular this means that to be deep a work must be dark, with The Godfather and Taxi Driver being the paradigmatic cases. To the contemporary ear, a film in which a basically decent capitalist is driven to corrupt the state and exploit workers by the impersonal dynamics of class struggle might as well be written in Sanskrit. Much better to have a nihilistic anti-hero opposed by a fundamentalist charlatan, both of them driven entirely by their internal character.

A few tangential notes on the book:

- All the “I’ve seen the future and it works” stuff about Russia is cringe-inducing in retrospect, as is the not too subtle consistent description of the Bolsheviks as “working men” which is meant to imply that they weren’t combatants at all, but civilians, and thus all violence done to their faction during the Russian civil war was a war crime (even as the violence they did to other factions is regrettable, but understandable).

- While the book is very deliberately and explicitly to the far left on economics, it’s interesting how in many ways the book is, by today’s standards, extremely culturally reactionary in a more taken-for-granted kind of way. There are many references to various liberties and vices, all of which are used as examples of upper class decadence. This is clearest in the case of the three (count ’em) rich women who not only sleep around, but justify their behavior with elaborate self-serving treatises on free love. (In contrast, the only romantic relationship between two leftists is only consummated within marriage.) Likewise, the description of an election night victory party (for the candidate bought by the oil men) opens with an essay on jazz that is, ahem, racially insensitive. I take this as an example of how hard it is to project today’s political alignments into the past.

- You have to love a book that describes sociology as “an elaborate structure of classifications, wholly artificial, devised by learned gentlemen in search of something to be learned about.”

- Between the socialist politics of the book, his lengthy satire of the lives and works of Hollywood (Ross’ business partner is a satire of W. R. Hearst and Bunny dates an actress), and the inclusion of the Eli character as a (tangential) satire of Aimee Semple McPherson, you can see why the Hollywood moguls and McPherson worked so strenuously to oppose Sinclair’s run for governor of California a few years after the book was published. This gubernatorial run had an important place in Hollywood history in that it set the groundwork for the radicalization of the WGA, and by further extension, the blacklist.

Rate vs raw number

| Gabriel |

Two things I read recently spoke to my sense of why it doesn’t make sense to talk about raw numbers but only rates.

One is the observation that “from 2004 to 2007 more people left California for Texas and Oklahoma than came west from those states to escape the Dust Bowl in the 1930s.” I’m not going to claim that the article has it wrong and California is in fantastic shape, but this is a very misleading figure. (Even though it’s pretty funny to imagine a latter day Tom Joad loading up the aroma therapy kit into his Prius and heading east in search of work and low taxes). Let’s put aside that it’s using out-migration when net migration is a much better measure of the “voting with your feet” effect. This “worse than the dust bowl” factoid ignores that the present population of California is four times bigger than the combined population of Texas and Oklahoma in 1930, so as a rate the gross outflow is much less than that of the Dust Bowl Okies. (On the other hand the Okies largely came to California whereas many native born Californians are moving to Arizona, Nevada, Oregon, and Washington, not just OK+TX). Anyway, the dust bowl comparison strikes me as a cute but meaningless figure, which shouldn’t be necessary given that the state’s problems are severe enough that you don’t need half-truths to describe them.
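A quick sketch of the arithmetic, with made-up numbers: the only thing taken from the argument above is the fourfold difference in base population, and I simplify by holding the raw outflow equal across the two episodes. The specific values of `base_pop` and `migrants` are hypothetical placeholders, not census or migration figures.

```python
def rate(count, population):
    """Out-migration as a share of the population at risk of leaving."""
    return count / population

# Hypothetical placeholder values, NOT real data
base_pop = 1_000_000   # stand-in for the combined TX+OK population in 1930
migrants = 50_000      # assume the same raw outflow in both episodes

dust_bowl_rate = rate(migrants, base_pop)
california_rate = rate(migrants, 4 * base_pop)  # California today is about 4x the base

ratio = california_rate / dust_bowl_rate
print(f"California's rate is {ratio:.2f} times the Dust Bowl rate")
```

Whatever placeholder you pick for the raw count, the ratio of the two rates stays at roughly one quarter, which is the point: a raw outflow has to be about four times larger before it is comparable as a rate.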

The second thing I read was this fascinating article on the public health debate over salt. (Long story short, the case for salt intake restrictions was always weak and has been growing weaker; nonetheless the hyper risk-averse killjoys of the public health community favor maintaining the low recommended daily allowance just in case.) The most interesting thing to me was this passage:

The controversy itself remains potent because even a small benefit–one clinically meaningless to any single patient–might have a major public health impact. This is a principal tenet of public health: Small effects can have important consequences over entire populations. If by eating less salt, the world’s population reduced its average blood pressure by a single millimeter of mercury, says Oxford University epidemiologist Richard Peto, that would prevent several hundred thousand deaths a year

That is, we should take even infinitesimal rates seriously if they are applied to a large population. If you take this logic to its natural conclusion it implies that in ginormous countries like China and India the speed limit should be 40 miles an hour and they should put statins in the drinking water, but in tiny countries like New Zealand or Belize people should drive like the devil is after them and open another bag of pork rinds. Or if you take a more cosmopolitan perspective, you could say that we should further restrict our salt intake until some time around 2075 (the UN’s best estimate for the peak world population) and after that we can start having the occasional french fry again.

I guess I shouldn’t be too harsh on this kind of thing since as someone who sometimes deals with very large datasets, it is in my interests for peer reviewers to pay attention to my awesome p-values (look Ma, three stars!) and ignore that in some instances these p-values are attached to betas that aren’t substantively or theoretically significant, but benefit from practically infinite N driving standard error practically to zero.
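Here is a rough sketch of that last point. It uses the textbook standard error of a mean, sigma over the square root of N, as a simplified stand-in for the standard error of a regression coefficient, and the effect size of 0.01 standard deviations is a hypothetical value chosen to be substantively trivial.

```python
import math

def z_stat(beta, sigma, n):
    """z-statistic for an estimate whose standard error is sigma / sqrt(n)."""
    se = sigma / math.sqrt(n)
    return beta / se

beta = 0.01   # hypothetical, substantively trivial effect (in sigma units)
sigma = 1.0

for n in (100, 10_000, 1_000_000):
    z = z_stat(beta, sigma, n)
    verdict = "significant" if abs(z) > 1.96 else "not significant"
    print(f"N={n:>9,}  z={z:6.2f}  {verdict} at p<.05")
```

The effect never changes; only N does. Somewhere between ten thousand and a million cases the same trivial beta crosses the conventional threshold and starts collecting stars.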

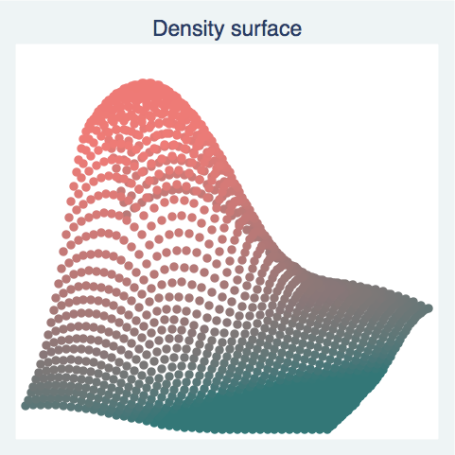

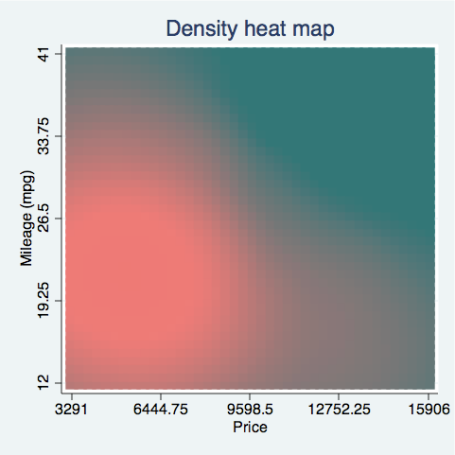

Surface / contour graphs with tddens.ado

| Gabriel |

I have previously complained about the lack of surface / contour graphs in Stata, first providing native code for some thoroughly fug graphs, then code that pipes to gnuplot. The latter solution produces nice output but a) is a pain to install on Mac or Windows and b) doesn’t adhere to Stata’s graph conventions.

I was actually considering cleaning up my gnuplot pipe and submitting it to SSC, but no longer, for there is a new ado file by Austin Nichols that does this natively and produces absolutely gorgeous graphs. The only advantage of piping things to gnuplot is that gnuplot is faster, but I think the advantages of doing everything natively within Stata make the extra couple seconds well worth it.

Fire up your copy of Stata and type

ssc install tddens

Here’s some sample output:

On behalf of Stata users everywhere, thank you Dr. Nichols.

They never did this on Mad Men

| Gabriel |

DDB (the world’s biggest ad agency) is pretty pissed off at its Brazilian office right now. Recently an unsolicited spec ad “for” the World Wildlife Fund showed up in which an entire squadron of commercial jet liners are aimed squarely at the Manhattan skyline as it appeared at 8:45am on 9/11/01 (although in the ad the sky is overcast). AdAge describes it as:

The description of the ad submitted by the agency said “We see two airplanes blowing up the WTC’s Twin Towers…lettering reminds us that the tsunami killed 100 times more people. The film asks us to respect a planet that is brutally powerful.”

Note that this is not just morally odious (at least to Americans both in and out of the ad industry — apparently foreign ad men and ad prize judges don’t feel this as uniformly as we do) but scientifically illiterate as tsunamis aren’t plausibly connected to human activity. (The ad seems to be confusing them with hurricanes, which are plausibly connected to global warming).

Once the ad became notorious in the ad world, various people tried to track down its provenance, with the Brazilian trade magazine Meio & Mensagem finding old entry records for advertising creative competitions showing it came from DDB Brasil, which at that point ’fessed up. Needless to say, neither the WWF nor the DDB parent company is happy about this and the responsible team at DDB Brasil was fired. To me the whole thing is best summed up in an AdAge op-ed that sees this ad as the extreme manifestation of creative run amuck in search of prestige and expression, rather than an old-fashioned sell.

Creative directors are entirely to blame for this state of affairs. The main problem is that most of them got where they are today by, you guessed it, winning creative awards. And guess the No. 1 target they’re driving — and I mean driving — their teams to achieve.

This scandal, and the attribution of the malfeasance to the awards mentality, reminded me of some interesting work lately on how prizes can shape fields. (See the bottom of the post for cites).

In advertising specifically you see a real conflict between ad people who see themselves as basically artists and those who see themselves as salesmen. The former are obviously more aligned with the awards mentality, but the latter have the “effies” (for “effective,” as compared to self-indulgent, marketing). Anyway, as seen in this little case study, some ad agencies are:

- interested in shock value that will attract the attention of prize juries but alienate many consumers

- so desperate to win awards that they will create spec ads without the knowledge or consent of the putative client, arrange to have them published, and then submit them in the competition.

Cites for awards literature:

- Anand, N. and B. C. Jones. 2008. “Tournament Rituals, Category Dynamics, and Field Configuration: The Case of the Booker Prize.” Journal of Management Studies 45:1036-1060.

- Anand, N. and Mary R. Watson. 2004. “Tournament Rituals in the Evolution of Fields: The Case of the Grammy Awards.” Academy of Management Journal 47:59-80.

- English, James. 2005. The Economy of Prestige: Prizes, Awards, and the Circulation of Cultural Value. Cambridge, Mass.: Harvard University Press.

- Frey, Bruno S. and Susanne Neckermann. 2008. “Awards: A View from Psychological Economics.” University of Zurich Institute for Empirical Research in Economics Working Paper No. 357.

[Update: for a much more pleasant PSA story, see Jay’s post on “Don’t Mess With Texas.”]

Life imitates SPQ

| Gabriel |

Marketing companies are offering services to let advertisers (or really pathetically needy ordinary end users) inflate their apparent popularity. This of course was the gist of the second half of the Salganik and Watts “Music Lab” experiments, in which the researchers flipped the download count (so popular songs appeared unpopular and vice versa) then watched to see whether anyone could tell the difference.

googling “stata”

| Gabriel |

As you may have noticed, Google sometimes seems to find it very difficult to believe that anyone intended to google things about “stata” when there are so many totally awesome pages out there about “state.” For instance, if you google “stata opencl” or “stata escape,” most of the results include “state” but not “stata.” Unfortunately there appears to be no “don’t correct my spelling” option but you can tell it to exclude things with the word “state” in them by adding “-state” (with a plain hyphen) to the end of your query. So searching for “stata opencl -state” gives better results.

Dry, with hints of stone fruit, and a subtle aftertaste of the null hypothesis

| Gabriel |

The number of gold medals won by different wines at competition follows a binomial but you’d expect this under the null. In fact, it appears that wins are totally random and the various competitions are not indicators loading on a latent “quality” variable. I’m trying to figure out if this makes me take Benjamin and Podolny more or less seriously. I’m thinking more, since if quality isn’t an issue that pretty much eliminates any question about spuriousness. In related news, The New Yorker had an interesting article about two buck chuck a few months ago.

via O&M
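To see what “binomial under the null” looks like, here is a small sketch. It assumes every entry has the same chance of gold in every competition regardless of the wine, and the values of `n_competitions` and `p_gold` are hypothetical, not taken from the study.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k golds in n independent competitions."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n_competitions = 5   # hypothetical number of competitions each wine enters
p_gold = 0.1         # hypothetical chance of gold per entry, identical for all wines

pmf = [binom_pmf(k, n_competitions, p_gold) for k in range(n_competitions + 1)]
for k, prob in enumerate(pmf):
    print(f"{k} golds: {prob:.4f}")
```

If the observed distribution of medal counts matches this kind of curve, then a wine with several golds is about as informative as a coin that came up heads several times in a row.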

Weighting, omitted variable bias, and interaction effects

| Gabriel |

Everyone agrees that weights can be necessary for cross-tabs but you sometimes hear arguments that they are unnecessary for regression if you just control for whatever you were using to calculate the weights. This is true if you are concerned about different intercepts, but doesn’t do anything if you are concerned about different slopes. Of course you could specify these different slopes as interaction effects but:

- these interactions may not occur to you

- you’re talking about a majorly cluttered model

Here’s a simple illustration of how it works. Whites make more than blacks and men make more than women. However the income difference between black women and black men is much smaller than the gender gap among whites. One way to put this is that “black” and “female” have negative main effects but a positive interaction. Another way to put this is that the effect of gender on income has a different slope for whites and for blacks.

Nonetheless it may be meaningful to think of a grand slope for the whole population either by default because it hasn’t occurred to you to check for an interaction or because you think it’s more theoretically parsimonious to omit it. The grand slope should be a compromise between the (steep) slope for whites and the (shallow) slope for blacks but since there are more whites than blacks it should be closer to the white slope. However if you have an oversample of blacks, your estimate of the grand slope will be too close to that for blacks, even if you control for race. That is, if you’re worried about intercepts then controls are similar to weighting, but if you are worried about slopes then (additive) controls don’t do you any good.

Here’s a demonstration:

*create a population that is 10% black and (orthogonally) 50% female

clear

eststo clear

set obs 10000

gen black=0

replace black=1 in 1/1000

gen female=mod([_n],2)

gen whiteman=0

replace whiteman=1 if black==0 & female==0

*create "income" where white women, black men, and black women make the same amount of money

* and white men make more

*should be poisson, but for simplicity make it normal

gen income=rnormal()

replace income=income+whiteman

*white men are +1 sigma on income relative to the other three categories (which are equal to each other)

*confirmed in this table, where most groups are at about (standardized) zero and white men at about 1

table black female, c(m income)

eststo: regress income black female

eststo: regress income black female whiteman

*these are the true effects for the population (90% white, 10% black)

*imagine doing a survey n=1000 with a stratified random sample oversampling blacks

gen sample=0

gen stratrandomsample=runiform()

sort black stratrandomsample

replace sample=1 in 1/500 /*sample 500 whites (out of 9000 in pop)*/

gsort -black stratrandomsample

replace sample=1 in 1/500 /*sample 500 blacks (out of 1000 in pop)*/

*create pweights

gen pweight=.

replace pweight=2 if black==1 & sample==1 /* 2=1000/500 or black population: black sample */

replace pweight=18 if black==0 & sample==1 /* 18=9000/500 or white population: white sample */

eststo: regress income black female if sample==1

eststo: regress income black female whiteman if sample==1

eststo: regress income black female [pweight=pweight] if sample==1

esttab , se mtitles("Population" "Population" "Unweighted" "Unweighted" "Weighted")

* 1. Population, gender only

* 2. Population, fully specified

* 3. Unweighted sample, gender only

* 4. Unweighted sample, fully specified

* 5. Weighted sample, gender only

* Note similarity of #1 and #5 and of #2 and #4. Contrast with #3

*have a nice day
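For readers without Stata handy, the logic of the demonstration can be sketched without any simulation. This is a simplification under stated assumptions: it ignores sampling noise, and it relies on gender being split evenly within each race, in which case the additive model’s coefficient on “female” is just the average of the within-race gender gaps weighted by each race’s (weighted) share of cases. The function name `grand_slope` is mine; the group sizes and pweights are the ones used in the do-file above.

```python
def grand_slope(slopes, weights):
    """Average of within-group slopes, weighted by each group's share of cases."""
    total = sum(weights.values())
    return sum(slopes[g] * weights[g] for g in slopes) / total

# within-race effect of "female" implied by the do-file: -1 for whites, 0 for blacks
slopes = {"white": -1.0, "black": 0.0}

population = {"white": 9000, "black": 1000}
unweighted_sample = {"white": 500, "black": 500}
pweights = {"white": 18, "black": 2}
# applying pweights restores each group's population share
weighted_sample = {g: unweighted_sample[g] * pweights[g] for g in unweighted_sample}

for label, w in [("population", population),
                 ("unweighted sample", unweighted_sample),
                 ("weighted sample", weighted_sample)]:
    print(f"{label:>18}: {grand_slope(slopes, w):+.2f}")
```

The population and the weighted sample agree on a grand slope near the white slope, while the unweighted oversample lands halfway between the two groups, which is the pattern the esttab output shows in columns 1, 5, and 3.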

Why I’m not upgrading to Snow Leopard (yet)

[Update 10/28/09, I’ve upgraded to Snow Leopard and Lyx works fine if, as a commenter suggested, you “get info” then click the “Rosetta” option. I’ve had no other issues with Snow Leopard and can recommend it, even for people who use Lyx a lot].

[Update 12/7/09, The current version of Lyx works fine running as Intel native].

| Gabriel |

Snow Leopard was primarily aimed at cutting out the cruft and both speeding up key apps (e.g., a much faster Finder and Mail client) and providing opportunities for application developers to get even more juice out of it in the future (e.g., Grand Central and OpenCL). I think it’s totally laudable that Apple (and for that matter, Microsoft with Windows 7) is taking a step back from the relentless march of bloatware to emphasize core performance issues and take advantage of new hardware architecture (esp. multicore and big GPUs). Unfortunately this low-level stuff can create some incompatibilities, especially if there is a debate between the OS and app developer over API standards or the app developer just decided to ignore API standards.

The issue that kills it for me is that Lyx is unstable under OS X 10.6. So my choices are to a) give up Lyx and write raw LaTeX, b) run Lyx in an Ubuntu virtual machine, or c) hold off on upgrading to Snow Leopard until Lyx gets patched, which should take a few months because this is low-level stuff and their team doesn’t have many Mac people. (Note that I’m not complaining, the Lyx team is generally very good about providing up-to-date Mac binaries rather than the usual thing of just directing you to the gruesome twosome of Fink+X11.) Although it breaks my geeky heart, the obvious answer is “c, stick with 10.5 for now.” I’d consider the virtual machine work-around for something I use once in a while, but I use Lyx several hours a day and that’s just too much hassle / performance hit to deal with.

Fortunately Stata (10 and 11), TextWrangler, TextMate, Smultron, R, and Crossover are all reported to work perfectly. If you don’t use Lyx (or anything else that’s buggy under 10.6, like SPSS 17), it’s probably a good idea to upgrade as it looks like a great version of the OS. However if it would break your favorite app, just wait it out. This especially makes sense given that all you’re getting right now is fairly small things like a faster Finder, better VPN+Entourage support, and snazzier QuickTime — the really amazing features of 10.6 (OpenCL+GrandCentral) won’t actually do anything until application developers rewrite their code to take advantage of them.
