Archive for November, 2015

Everything I Needed to Know (About Publication Bias), I Learned In (Pre-) Kindergarten

| Gabriel |

There has been a tremendous amount of hype over the last few years about universal pre-K as a magic bullet to solve all social problems. We see a lot of talk of return on investment at rates usually only promised by prosperity gospel preachers and Ponzi schemes. Unfortunately, two recent large-scale studies, one in Quebec and one in Tennessee, showed small negative effects for pre-K. An article in New York magazine writing up the Tennessee study advises us to fear not, for:

These are all good studies, and they raise important questions. But none of them is an indictment of preschool, exactly, so much as an indictment of particular approaches to it. How do we know that? Two landmark studies, first published in 1993 and 2008, demonstrate definitively that, if done right, state-sponsored pre-K can have profound, lasting, and positive effects — on individuals and on a community.

It then goes on to explain that the Perry and Abecedarian projects were studies involving 123 and 100 people respectively, had marvelous outcomes, and were play-oriented rather than drill-oriented.

The phrase “demonstrate definitively” is the kind of phrase you have to be very careful with, and it just looks silly to say that this definitive knowledge comes from two studies with sample sizes of about a hundred. Tiny studies with absurdly large effect sizes are exactly where you would expect to find publication bias. Indeed, this is almost inevitable when the studies are so underpowered that the only way to get β/se>1.96 is for β to be implausibly large. (As Jeremy Freese observed, this is among the dozen or so major problems with the PNAS himmicane study.)
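To put rough numbers on that threshold, here is a minimal back-of-the-envelope sketch in Python. It assumes a two-arm study with a roughly even split, an outcome standardized to a standard deviation of 1, and a simple difference in means as the estimator. None of those details come from the Perry or Abecedarian papers, and the function name is made up for illustration.

```python
import math

def min_significant_effect(n_treated, n_control, sd=1.0, z_crit=1.96):
    """Smallest |beta| (in outcome SD units) that clears beta/se > z_crit.

    Assumes a difference in means between two independent arms that share
    the same outcome standard deviation `sd` (an illustrative assumption).
    """
    se = sd * math.sqrt(1.0 / n_treated + 1.0 / n_control)
    return z_crit * se

# Hypothetical even splits for total sample sizes of 100, 123, and 10,000.
for label, n_t, n_c in [("N = 100", 50, 50),
                        ("N = 123", 61, 62),
                        ("N = 10,000", 5000, 5000)]:
    print(f"{label:>10}: beta must exceed {min_significant_effect(n_t, n_c):.3f} SD")

# N = 100: beta must exceed 0.392 SD
# N = 123: beta must exceed 0.353 SD
# N = 10,000: beta must exceed 0.039 SD
```

Under these assumptions, a study of about a hundred people cannot report a significant result at all unless its estimate is more than a third of a standard deviation, whatever the true effect happens to be.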

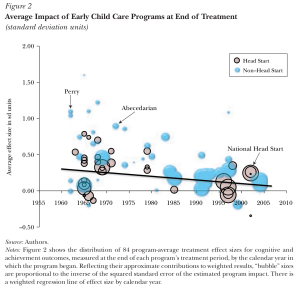

The standard way to detect publication bias is through a meta-analysis showing that small studies have big effects and big studies have small effects. For instance, this is what Card and Krueger showed in a meta-analysis of the minimum wage literature, which demonstrated that their previous paper on PA/NJ was only an outlier when you didn’t account for publication bias. Similarly, in a 2013 JEP article, Duncan and Magnuson do a meta-analysis of the pre-K literature. Their visualization in figure 2 emphasizes the declining effect sizes over time, but you can also see that the large studies (shown as large circles) generally have much smaller β than the small studies (shown as small circles). If we added the Tennessee and Quebec studies to this plot, they would be large circles on the right, slightly below the x-axis. That is to say, they would fall right on the regression line and might even pull it down further.

This is what publication bias looks like: old small studies have big effects and new large studies have small effects.
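The same pattern falls out of a toy simulation. The sketch below is not a reanalysis of any of the studies above. It assumes a true effect of 0.1 standard deviations that is identical at every study size, and a crude publication rule under which a study only sees print if β/se>1.96. The sample sizes, seed, and function name are all hypothetical.

```python
import math
import random
import statistics

def mean_published_effect(n_per_arm, true_effect=0.1, n_studies=400, seed=2015):
    """Simulate two-arm studies; return (mean published beta, number published).

    A study counts as "published" only if beta / se > 1.96, which is the
    assumed (deliberately crude) significance filter.
    """
    rng = random.Random(seed)
    published = []
    for _ in range(n_studies):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
        treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_arm)]
        beta = statistics.fmean(treated) - statistics.fmean(control)
        se = math.sqrt(
            (statistics.variance(treated) + statistics.variance(control)) / n_per_arm
        )
        if beta / se > 1.96:
            published.append(beta)
    mean_beta = statistics.fmean(published) if published else float("nan")
    return mean_beta, len(published)

# Same true effect at every size; only the sample size changes.
for n_per_arm in (25, 100, 400, 1600):
    mean_beta, n_published = mean_published_effect(n_per_arm)
    print(f"n per arm = {n_per_arm:>4}: {n_published:>3} of 400 published, "
          f"mean published beta = {mean_beta:.2f}")
```

Under these assumptions the average published estimate should shrink toward 0.1 as the studies get larger, even though the true effect never changes. The small studies that make it through the filter are simply the ones that got lucky.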

I suppose it’s possible that Perry and Abecedarian showed big results because the programs were better implemented than those in the newer studies, but this is not “demonstrated definitively.” Given the strong evidence that it’s all publication bias, let’s tentatively assume that if something’s too good to be true (such as that a few hours a week can almost deterministically make kids stay in school, earn a solid living, and stay out of jail), then it ain’t.
