Posts tagged ‘shell’

Now These Are the Names, Pt 1

| Gabriel |

There’s a lot of great research on names and I’ve been a big fan of it for years, although it’s hard to reconcile with my own favorite diffusion models since names are a flow whereas the stuff I’m interested in generally concerns diffusion across a finite population.

Anyway, I was inspired to play with this data by two things that came up in conversation. The one I’ll discuss today is that somebody repeated a story about a girl named “Lah-d,” which is pronounced “La dash da” since “the dash is not silent.”

This appears to be a slight variation on an existing apocryphal story, but it reflects three real social facts that are well documented in the name literature. First, black girls have the most eclectic names of any demographic group, with a high premium put on creativity and about 30% having unique names. Second, even when their names are unique coinages they still follow systematic rules, as with the characteristic prefix “La” and consonant pair “sh.” Third, these distinctly black names are an object of bewildered mockery (and a basis for exclusion) by others, which is the appeal in retelling this and other urban legends on the same theme.*

To tell if there was any evidence for this story I checked the Social Security data, but the web searchable interface only includes the top 1000 names per year. Thus checking on very rare names requires downloading the raw text files. There’s one file per year, but you can efficiently search all of them from the command line by going to the directory where you unzipped the archive and grepping.

cd ~/Downloads/names
grep '^Lah-d' *.txt
grep '^Lahd' *.txt

As you can see, this name does not appear anywhere in the data. Case closed? Well, there’s a slight caveat in that for privacy reasons the data only include names that occur at least five times in a given birth year. So while it includes rare names, it misses extremely rare names. For instance, you also get a big fat nothing if you do this search:

grep '^Reihan' *.txt

This despite the fact that I personally know an American named Reihan. (Actually I’ve never asked him to show me a photo ID so I should remain open to the possibility that “Reihan Salam” is just a memorable nom de plume and his birth certificate really says “Jason Miller” or “Brian Davis”).

For names that do meet the minimal threshold, though, you can use grep as the basis for a quick and dirty time series. To automate this I wrote a little Stata script called grepnames. To call it, you give it two arguments: the (case-sensitive) name you’re looking for and the directory where you put the name files (including the trailing slash, since the script builds its file-name pattern directly from it). It gives you back a time series of how many births had that name.

capture program drop grepnames
program define grepnames
    local name "`1'"
    local directory "`2'"
    tempfile namequery
    shell grep -r '^`name'' "`directory'" > `namequery'
    insheet using `namequery', clear
    gen year=real(regexs(1)) if regexm(v1,"`directory'yob([12][0-9][0-9][0-9])\.txt")
    gen name=regexs(1) if regexm(v1,"`directory'yob[12][0-9][0-9][0-9]\.txt:(.+)")
    keep if name=="`name'"
    ren v3 frequency
    ren v2 sex
    fillin sex year
    recode frequency .=0
    sort year sex
    twoway (line frequency year if sex=="M") (line frequency year if sex=="F"), legend(order(1 "Male" 2 "Female")) title(`"Time Series for "`name'" by Birth Cohort"')
end

For instance:

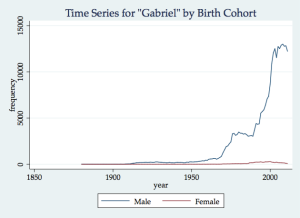

grepnames Gabriel "/Users/rossman/Documents/codeandculture/names/"

Note that these numbers are not scaled for the size of the cohorts, either in reality or as observed by the Social Security Administration. (Their data is noticeably worse for cohorts prior to about 1920). Still, it’s pretty obvious that my first name has grown more popular over time.

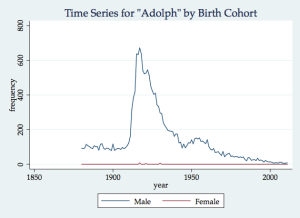

We can also replicate a classic example from Lieberson of a name that became less popular over time, for rather obvious reasons.

grepnames Adolph "/Users/rossman/Documents/codeandculture/names/"
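If you’d rather stay in the shell, something like the following gets you the same numbers without Stata. This is only a sketch: the function name `name_series` is made up for illustration, and it assumes the files are named yobYYYY.txt and hold comma-separated Name,Sex,Count lines (which is the layout the Stata code above parses). Unlike grepnames it doesn’t draw a graph or fill in zeros for years in which the name is absent.

```shell
# name_series NAME DIR
# Print "year<TAB>sex<TAB>count" for every exact match of NAME in DIR/yobYYYY.txt.
# (name_series is a hypothetical helper, not part of grepnames.)
name_series() {
    name=$1
    dir=$2
    for f in "$dir"/yob[12][0-9][0-9][0-9].txt; do
        [ -e "$f" ] || continue      # skip if the glob matched nothing
        year=${f##*/yob}             # drop everything through "yob"
        year=${year%.txt}            # drop the extension
        # strip a trailing carriage return (in case of Windows line endings),
        # then keep only rows whose first field equals the name exactly
        awk -F, -v n="$name" -v y="$year" \
            '{ sub(/\r$/, "") } $1 == n { print y "\t" $2 "\t" $3 }' "$f"
    done
}
```

For example, `name_series Gabriel ~/Downloads/names > gabriel.tsv` writes a tab-delimited file you can read into whatever you like to graph with. Matching on the whole first field means “Gabriel” doesn’t also pick up “Gabriela.”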

Next time: how diverse names are over time, with thoughts on entropy indices.

(Also see Jay’s thoughts on names, as well as taking inspiration from my book to apply Bass models to film box office).

* Yes, I know that one of those stories is true but the interesting thing is that people like to retell it (and do so with mocking commentary), not that the underlying incident is true. It is also true that yesterday I had eggs and coffee for breakfast, but nobody is likely to forward an e-mail to their friends repeating that particular banal but accurate nugget.

Which of my cites is missing?

| Gabriel |

I was working on my book (in Lyx) and it drove me crazy that at the top of the bibliography was a missing citation. Finding the referent to this missing citation manually was easier said than done and ultimately I gave up and had the computer do it. These suggestions are provided rather inelegantly as a “log” spread across two languages. However you could pretty easily work them into an argument-passing script written in just one language. Likewise, it should be easy to modify them for use with plain vanilla LaTeX if need be.

First, I pulled all the citations from the book manuscript and all the keys from my Bibtex files.

grep '^key ' book.lyx | sort -u | perl -pe 's/^key "([^"]+)"/$1/' > cites.txt

grep '^\@' ~/Documents/latexfiles/ghrcites_manual.bib | perl -pe 's/\@.+\{(.+),/$1/' > bibclean.txt

grep '^\@' ~/Documents/latexfiles/ghrcites_zotero.bib | perl -pe 's/\@.+\{(.+),/$1/' >> bibclean.txt

Then in Stata I merged the two files and looked for Bibtex keys that appear in the manuscript but not the Bibtex files. [Update, see the comments for a better way to do this.] From that point it was easy to add the citations to the Bibtex files (or correct the spelling of the keys in the manuscript).

insheet using bibclean.txt, clear
tempfile x
save `x'
insheet using cites.txt, clear
merge 1:1 v1 using `x'
list if _merge==1
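The Stata step isn’t strictly necessary, since grep can do the comparison itself. Here is a minimal sketch (not necessarily the approach suggested in the comments) that assumes the cites.txt and bibclean.txt files created above, with one key per line. The function name `missing_keys` is my own invention.

```shell
# missing_keys CITES BIBKEYS
# Print keys that appear in CITES but in no line of BIBKEYS.
# (missing_keys is a hypothetical helper name.)
missing_keys() {
    # -F: treat each key as a fixed string, not a regular expression
    # -x: match whole lines only
    # -v: keep the lines that do NOT match
    # -f: read the list of keys to exclude from a file
    grep -Fxv -f "$2" "$1" | sort -u
}
```

Calling `missing_keys cites.txt bibclean.txt` should print the same keys as the Stata “list if _merge==1” above.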

A Cloud-Hating Curmudgeon’s Unofficial Manual for a Grid-less Workflow on UCLA’s Hoffman2 Cluster

| Gabriel |

A couple years ago UCLA’s pop center migrated our statistical computing from our own server to the university’s Hoffman2 cluster. When this happened I tried out the cluster and hated the recommended “Grid” browser-based GUI, with the single biggest aggravation being that it requires you to transfer files one at a time through a clunky upload/download wizard. As such, I paid for my own Stata MP license (which even as part of a lab volume purchase wasn’t cheap) and since the migration I’ve just done all my statistics locally on my MacBook.

I’ve recently given Hoffman2 another try and realized that I can just ignore “Grid” and do my regular workflow when dealing with a server:

- write code with a good local text editor (preferably one that is SFTP compatible)

- sync scripts, data, and output between the local and remote file systems with an SFTP client

- batch jobs on the server through SSH

- (as a last resort) run GUI apps through X11

Pretty plain vanilla stuff but it’s actually much simpler in practice than a (broken) browser-based GUI.

Now that I’ve gotten this worked out I’m a big fan of Hoffman2 for big jobs because it’s extremely fast. For instance, a simulation that takes Stata MP about seven hours on my MacBook took just an hour and twenty minutes on Hoffman2. As such I’m writing up some notes on how I use it, in part so I remember and in part so I can recommend the cluster to colleagues and students.

File management. Use a dedicated FTP client like Filezilla or Cyberduck. (For some reason the Finder/Pathfinder “Connect to Server” command doesn’t work with Hoffman2). The connection type should be “SFTP”. The URL is “hoffman2.idre.ucla.edu”. Your name and password are the same logins you use for an SSH terminal session (or as the documentation calls it, a “node” session). Use your FTP client to upload data and scripts (which you will probably write locally on a text editor) and download output. Here’s what my configuration window looks like in Cyberduck.

Coding. Either do this locally and sync it through SFTP (see above) or use a text editor with integrated SFTP. On a Mac, TextWrangler/BBEdit has great SFTP support (in addition to other notable features such as really good regular expressions support and Stata syntax highlighting). I can also recommend the cross-platform program Komodo Edit. Or if you’re into that sort of thing you can use Vim or emacs through SSH.

Connecting to SSH. Open your “Terminal” (on Mac/Linux) or an SSH client (on Windows). Type “ssh hoffman2.idre.ucla.edu”. If it didn’t guess your username correctly you need to write “ssh hoffman2.idre.ucla.edu -l username”. You now have a bash session. You can do all the usual stuff, but mostly you’re just going to batch jobs.

Batching a Job. If you just want to put a job in the queue you simply type “program.q script”. For instance, to do the Stata script “foo.do” you’d make sure you’re in the right directory and type:

stata.q foo.do

The documentation makes it sound much more complicated than this, but 9 times out of 10 that’s all you need to do. The system will email you when your job starts and finishes and you can use SFTP to retrieve the output and log. However if you want to kill a job or something, you just type program.q without arguments and then follow the instructions.

Importing your Stata ado-files

Unlike R (where you have to put “library()” at the start of your source files), Stata’s use of libraries is so transparent that you can forget they’re not part of the stock Stata installation. (My first batch crashed twice because I forgot to install some of my commands). On your own computer, remind yourself what ado-files you have installed with these Stata commands.

disp "`c(sysdir_plus)'"
disp "`c(sysdir_personal)'"

On a Mac, both of these folders are in “~/Library/Application Support/Stata/ado”

Once you remember what ado-files you want, write yourself a do-file that will install them and batch it. For instance, I did:

ssc install fs
ssc install fsx
ssc install gllamm
ssc install estout
ssc install stata2pajek
ssc install shufflevar

The ado files go in “~/ado” which has the practical upshot that you don’t need admin permission to install them and they persist between sessions.

Interactive GUI Usage. Do it on your own computer. If that’s not possible (perhaps because you don’t have a personal license for a particular piece of software) use X11 rather than Grid. When I experimented with Grid’s browser-based VNC session it took forever to load the Java Virtual Machine, it refreshed at about 10 frames per second, and worst of all it wouldn’t capture keyboard input.

The results are much better if you use a real X11 client rather than Grid’s JVM. To do this you first connect through X11 (in Mac this means using X11.app rather than the Terminal.app) and add the flag “-X” to your ssh session (eg, “ssh hoffman2.idre.ucla.edu -l rossman -X”). As always you can test it with “xeyes” command. You then type “xstata” and follow the instructions carefully. (It bounces you to an interactive node and makes you type back a fairly lengthy command to actually launch the session). It’s a pretty fair amount of work to get an X11 session but unlike the browser version it is useable. (For more instructions on X11 sessions for Stata and other software see the links labeled “How to run on ATS-Hosted Clusters” in this table). Try to avoid this though as it’s faster and less work to just script and batch it with the “stata.q” command described above.

Finally, if you just want an interactive command-line session you can use ssh and issue the “qrsh” command. This actually works really well. Remember that you don’t need to see a graph to make a graph but can use the “graph export” command in Stata and the “pdf()” function in R to write graphs to disk and then retrieve them through your SFTP client.

OS X memory clean up w/o reboot

| Gabriel |

Windows XP had a notorious bug (which I think has been fixed in Vista/7) that it didn’t allocate memory very well and you had to restart every once in a while. Turns out OS X has a comparable problem. Via the Atlantic I see a trick for releasing the memory.

Basically, you do a “du” query and this tricks the computer into tidying things up. This takes about fifteen minutes and implies a noticeable performance hit but after that it works much better. Ideally, you run it as root and the Atlantic suggests a one-liner that uses sudo, but keeping your root password in plain text strikes me as a really bad idea. Rather I see three ways to do it.

- Run it as a regular user and accept that it won’t work as well. The command is just

du -sx /

and you can enter it from the Terminal, cron it, or save it as an Automator service.

- Do it interactively as root, which requires this code (enter your password when prompted).

sudo du -sx /

- Cron it as root. Here’s how to get to root’s cron table

sudo -i crontab -e

Once in the cron table just add the same

du -sx /

command as before, preferably scheduled for a time when your computer is likely to be turned on but not doing anything intensive (maybe lunch time).

Scraping Twitter

| Gabriel |

I recently got interested in a set of communities that have a big Twitter presence and so I wrote some code to collect it. I started out creating OPML bookmark files which I can give to RSSOwl,* but ultimately decided to do everything in wget so I can cron it on my server. Nonetheless, I’m including the code for creating OPML in case people are interested.

Anyway, here’s the directory structure for the project:

project/
  a/
  b/
  c/
  lists/

I have a list of Twitter web URLs for each community that I store in “project/lists” and call “twitterlist_a.txt”, “twitterlist_b.txt”, etc. I collected these lists by hand but if there’s a directory listing on the web you could parse it to get the URLs. Each of these files is just a list and looks like this:

http://twitter.com/adage
http://twitter.com/science

I then run these lists through a Bash script, called “twitterscrape.sh” which is run from “project/” and takes the name of the community as an argument. It collects the Twitter page from each URL and extracts the RSS feed and “Web” link for each. It gives the RSS feeds to an OPML file, which is an XML file that RSS readers treat as a bookmark list and to a plain text file. The script also finds the “Web” link in the Twitter feeds and saves them as a file suitable for later use with wget.

#!/bin/bash
#twitterscrape.sh
#take list of twitter feeds
#extract rss feed links and convert to OPML (XML feed list) format
#extract weblinks

#get current first page of twitter feeds
cd $1
wget -N --input-file=../lists/twitterlist_$1.txt
cd ..

#parse feeds for RSS, gen opml file (for use with RSS readers like RSSOwl)
echo -e "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n<opml version=\"1.0\">\n\t<head/>\n\t<body>" > lists/twitrss_$1.opml
grep -r 'xref rss favorites' ./$1 | perl -pe 's/.+\/(.+): .+href="\/favorites\/(.+)" class.+\n/\t\t<outline text="$1" type="rss" xmlUrl="http:\/\/twitter.com\/statuses\/user_timeline\/$2"\/>\n/' >> lists/twitrss_$1.opml
echo -e "\t</body>\n</opml>\n" >> lists/twitrss_$1.opml

#make simple text list out of OPML (for use w wget)
grep 'http\:\/' lists/twitrss_$1.opml | perl -pe 's/\s+\<outline .+(http.+\.rss).+$/$1/' > lists/twitrss_$1.txt

#parse Twitter feeds for link to real websites (for use w wget)
grep -h -r '>Web</span>' ./$1 | perl -pe 's/.+href="(.+)" class.+\n/$1\n/' > lists/web_$1.txt

echo -e "\nIf using GUI RSS, please remember to import the OPML feed into RSSOwl or Thunderbird\nIf cronning, set up twitterscrape_daily.sh\n"
#have a nice day

This basically gives you a list of RSS feeds (in both OPML and TXT), but you still need to scrape them daily (or however often). If you’re using RSSOwl, “import” the OPML file. I started by doing this, but decided to cron it instead with two scripts.

The script twitterscrape_daily.sh collects the RSS files, calls the perl script to do some of the cleaning, combines the cleaned information into a cumulative file, and then deletes the temp files. Note that Twitter only lets you get 150 RSS feeds within a short amount of time — any more and it cuts you off. As such you’ll want to stagger the cron jobs. To see whether you’re running into trouble, the file project/masterlog.txt counts how many “400 Error” messages turn up per run. Usually these are Twitter turning you down because you’ve already collected a lot of data in a short amount of time. If you get this a lot, try splitting a large community in half and/or spacing out your crons a bit more and/or changing your IP address.

#!/bin/bash
#twitterscrape_daily.sh
#collect twitter feeds, reshape from individual rss/html files into single tab-delimited text file

DATESTAMP=`date '+%Y%m%d'`
PARENTPATH=~/project
TEMPDIR=$PARENTPATH/$1/$DATESTAMP

#get current first page of twitter feeds
mkdir $TEMPDIR
cd $TEMPDIR
wget -N --random-wait --output-file=log.txt --input-file=$PARENTPATH/lists/twitrss_$1.txt

#count "400" errors (ie, server refusals) in log.txt, report to master log file
echo "$1 $DATESTAMP" >> $PARENTPATH/masterlog.txt
grep 'ERROR 400\: Bad Request' log.txt | wc -l >> $PARENTPATH/masterlog.txt

#(re)create simple list of files
sed -e 's/http:\/\/twitter.com\/statuses\/user_timeline\///' $PARENTPATH/lists/twitrss_$1.txt > $PARENTPATH/lists/twitrssfilesonly_$1.txt
for i in $(cat $PARENTPATH/lists/twitrssfilesonly_$1.txt); do perl $PARENTPATH/twitterparse.pl $i; done
for i in $(cat $PARENTPATH/lists/twitrssfilesonly_$1.txt); do cat $TEMPDIR/$i.txt >> $PARENTPATH/$1/cumulativefile_$1.txt ; done

#delete the individual feeds (keep only "cumulativefile") to save disk space
#alternately, could save as tgz
rm -r $TEMPDIR

#delete duplicate lines
sort $PARENTPATH/$1/cumulativefile_$1.txt | uniq > $PARENTPATH/$1/tmp
mv $PARENTPATH/$1/tmp $PARENTPATH/$1/cumulativefile_$1.txt
#have a nice day

Most of the cleaning is accomplished by twitterparse.pl. It’s unnecessary to cron this script as it’s called by twitterscrape_daily.sh, but it should be in the same directory.

#!/usr/bin/perl
#twitterparse.pl by ghr
#this script cleans RSS files scraped by WGET
#usually run automatically by twitterscrape_daily.sh
use warnings; use strict;
die "usage: twitterparse.pl <foo.rss>\n" unless @ARGV==1;
my $rawdata = shift(@ARGV);

my $channelheader = 1 ; #flag for in the <channel> (as opposed to <item>)
my $feed = "" ; #name of the twitter feed <channel><title>
my $title = "" ; #item title/content <item><title> (or <item><description> for Twitter)
my $date = "" ; #item date <item><pubDate>
my $source = "" ; #item source (aka, iphone, blackberry, web, etc) <item><twitter:source>

print "starting to read $rawdata\n";
open(IN, "<$rawdata") or die "error opening $rawdata for reading\n";
open(OUT, ">$rawdata.txt") or die "error creating $rawdata.txt\n";
while (<IN>) {
    #find if in <item> (ie, have left <channel>)
    if($_ =~ m/^\s+\<item\>/) {
        $channelheader = 0;
    }
    #find title of channel
    if($channelheader==1) {
        if($_ =~ m/\<title\>/) {
            $feed = $_;
            $feed =~ s/\s+\<title\>(.+)\<\/title\>\n/$1/; #drop tags and EOL
            print "feed identified as: $feed\n";
        }
    }
    #find all <item> info and write out at </item>
    if($channelheader==0) {
        #note, cannot handle internal LF characters.
        #doesn't crash but leaves in leading tag and
        #only an issue for title/description
        #ignore for now
        if($_ =~ m/\<title\>/) {
            $title = $_;
            $title =~ s/\015?\012?//g; #manual chomp, global to allow internal \n
            $title =~ s/\s+\<title\>//; #drop leading tag
            $title =~ s/\<\/title\>//; #drop closing tag
        }
        if($_ =~ m/\<pubDate\>/) {
            $date = $_;
            $date =~ s/\s+\<pubDate\>(.+)\<\/pubDate\>\n/$1/; #drop tags and EOL
        }
        if($_ =~ m/\<twitter\:source\>/) {
            $source = $_;
            $source =~ s/\s+\<twitter\:source\>(.+)\<\/twitter\:source\>\n/$1/; #drop tags and CRLF
            $source =~ s/<a href="http:\/\/twitter\.com\/" rel="nofollow">(.+)<\/a>/$1/; #cleanup long sources
        }
        #when item close tag is reached, write out then clear memory
        if($_ =~ m/\<\/item\>/) {
            print OUT "\"$feed\"\t\"$date\"\t\"$title\"\t\"$source\"\n";
            #clear memory (for <item> fields)
            $title = "" ;
            $date = "" ;
            $source = "" ;
        }
    }
}
close IN;
close OUT;
print "done writing $rawdata.txt \n";

*In principle you could use Thunderbird instead of RSSOwl, but its RSS has some annoying bugs. If you do use Thunderbird, you’ll need to use this plug-in to export the mailboxes. Also note that by default, RSSOwl only keeps the 200 most recent posts. You want to disable this setting, either globally in the “preferences” or specifically in the “properties” of the particular feed.

Growl in R and Stata

| Gabriel |

Growl is a system notification tool for Mac that lets applications, the system itself, or hardware display brief notifications, usually in the top-right corner. The translucent floating look reminds me of the more recent versions of KDE.

Anyway, one of the things it’s good for is letting programs run in the background and having them let you know when something noteworthy has happened. Of course, a large statistics batch would qualify. I was running a 10 minute job in the R package igraph and got tired of checking to see when it was done so I found this tip. In a nutshell, it says to download Growl, including the command-line tool GrowlNotify from the “Extras” folder, then create this R function.

growl <- function(m = 'Hello world')

system(paste('growlnotify -a R -m \'',m,'\' -t \'R is calling\'; echo \'\a\' ', sep=''))

Since the R function “system()” is equivalent to the Stata command “shell,” I realized this would work in Stata as well and so I wrote this ado file.* You call it just by typing “growl”. It takes as an (optional) argument whatever you’d like to see displayed in Growl, such as “Done with first analysis” or “All finished” but by default displays “Stata needs attention.” Note that while Stata already bounces in the dock when it completes a script or hits an error, you can also have Growl appear at various points during the run of a script.

I’ve only tested it with StataMP, but I’d appreciate it if people who use Growl and other versions of Stata would post their results in the comments. If it proves robust I’ll submit it to SSC.

Here’s the Stata code. The most important line is the one near the end that begins “shell growlnotify” and if you were doing it by hand you’d do it as a one-liner but the rest of this stuff allows argument passing and compatibility with the different versions (small, IC, SE, MP) of Stata:

*1.0 GHR Jan 19, 2011
capture program drop growl
program define growl
    version 10
    set more off
    syntax [anything]
    if "`anything'"=="" {
        local message "Stata needs attention"
    }
    else {
        local message "`anything'"
    }
    local appversion "Stata"
    if "`c(flavor)'"=="Small" {
        local appversion "smStata"
    }
    else {
        if `c(SE)'==1 {
            if `c(MP)'==1 {
                local appversion "StataMP"
            }
            else {
                local appversion "StataSE"
            }
        }
        else {
            local appversion "Stata"
        }
    }
    shell growlnotify -a `appversion' -m \ "`message'" \ `appversion' \
end

*Most programming/scripting languages and a few GUI applications have a similar system call and you can likewise get Growl to work with them. For instance, Perl also has a function called system(). In shell scripting of course you can just use the “growlnotify” command directly.
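For the shell case, here is a rough sketch of a wrapper you could put at the end of a long batch script. The function name `notify_done`, the default message, and the “Terminal” application name are all placeholders I made up; the -a, -t, and -m flags are the same ones used in the R function above. If growlnotify isn’t installed the function just prints the message.

```shell
# notify_done [MESSAGE]
# Pop up a Growl notification, or fall back to printing the message.
# (notify_done and its defaults are hypothetical; adjust to taste.)
notify_done() {
    msg=${1:-"Job needs attention"}
    if command -v growlnotify >/dev/null 2>&1; then
        # -a: application whose icon to show, -t: title, -m: message body
        growlnotify -a Terminal -t "Shell is calling" -m "$msg"
    else
        printf '%s\n' "$msg"
    fi
}
```

You’d use it as something like `stata -b do foo.do; notify_done "foo.do finished"`, where the Stata batch call is just an example of a slow command.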

Dropbox

| Gabriel |

I’ve started playing with Dropbox lately. This is useful both as backup and as a user-friendly (but limited capacity) alternative to RCS on the Subversion model. The way it works is that Dropbox creates a folder called ~/Dropbox, then syncs everything in that folder to the cloud. The trouble is how I integrate it into my existing file system. Here are a few options:

- Hard links. That is, basically have the same file be located in two places at once. This seems ideal but is actually a bad idea since hard links can be broken pretty easily and you end up with two files rather than one file in two places. The only thing I’m comfortable using hard links for is incremental backup (as in my time-stamped scraping workflow), but I try not to use them in an active file system where all sorts of programs are doing God knows what to the file system.

- Symbolic links to ~/Dropbox. That is, keep my files where they were and put a symbolic link in ~/Dropbox. This is really easy to script and generally low hassle. The downside to this is that it can get screwed up if you’re using Dropbox to sync between two computers.

- Symbolic links from ~/Dropbox. That is, keep the file in ~/Dropbox and put a symbolic link in the original location. The appeal is that it solves the problem noted above. The problem is that this kills any relative paths from the document. For instance, my book is a Lyx file that links to rather than contains the graphs. The graphs are specified as relative paths and so Latex can’t find them if I keep the file itself in ~/Dropbox and put a symlink in ~/Documents/book. Furthermore, in any kind of file browsing at the original location, the file type shows up as a symbolic link rather than a “Lyx Document,” “Stata do-file,” or whatever. I can still tell what it is by the icon and extension, but I can’t sort by file type.

- Two copies, synced with Unison. Instead of linking, keep a copy of the file in both the original location and in ~/Dropbox. Then cron Unison to ensure that they are always current. The problem with this is that I’m using Dropbox in part to avoid having to learn how to script Unison.

Of these, #4 (Unison) strikes me as the most elegant but for my needs #2 (symlinks in ~/Dropbox) is the most practical and I’m too busy right now to really get my geek on. I’m almost always using my MacBook and on those rare occasions when I’m using another computer, I’ll just treat the main directories on the Dropbox cloud as read-only and then manually sync the updates to my Macbook’s original file system (and by extension, ~/Dropbox and the Dropbox cloud). If I ever get into a situation of regularly using multiple computers, I’ll probably do the Unison thing. Alternately, I could just pay $20/month to buy such a massive Dropbox account that I could basically treat it as my ~/Documents folder, which would eliminate the issue altogether.

Anyways, for now, I’m just keeping everything where it is and adding symbolic links to ~/Dropbox. To make this really easy, I used Automator to save this script as a right-clickable Finder (or PathFinder) service so I can easily send a symbolic link of a file to my ~/Dropbox directory.

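#!/bin/bash
# make a symbolic link in ~/Dropbox for each file or directory passed as an argument
for f in "$@"
do
    FILENAME=$(basename "$f")
    # directory path with spaces removed, "/" turned into ".", and the leading "." dropped
    DIRPATH=$(dirname "$f" | sed 's/ *//g' | sed 's/\//\./g' | sed 's/^.//')
    ln -s "$f" ~/Dropbox/"$DIRPATH.$FILENAME"
done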

This script creates a symbolic link inside ~/Dropbox. The symbolic link is named for the full path of the linked file, but with spaces suppressed and with all “/” (except the leading one) turned into “.” . For instance, if I apply the service to a file called:

/Users/rossman/Documents/book/book.lyx

it creates a symbolic link to this file called

/Users/rossman/Dropbox/Users.rossman.Documents.book.book.lyx

It also works on directories, eg, this:

/Users/rossman/Documents/miscservice/peerreview

gets this link:

/Users/rossman/Dropbox/Users.rossman.Documents.miscservice.peerreview
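If you want to check what a link will be called before creating it, the naming rule can be pulled out into its own function. This is just a sketch of the rule described above; the name `dropbox_link_name` is made up and the function only prints the name, it doesn’t touch the file system.

```shell
# dropbox_link_name PATH
# Print the link name the service would use for PATH:
# the directory part with spaces removed and "/" turned into "."
# (minus the leading one), followed by "." and the file name.
# (dropbox_link_name is a hypothetical helper.)
dropbox_link_name() {
    dir=$(dirname "$1" | sed -e 's/ //g' -e 's|/|.|g' -e 's/^\.//')
    printf '%s.%s\n' "$dir" "$(basename "$1")"
}
```

So `dropbox_link_name /Users/rossman/Documents/book/book.lyx` prints `Users.rossman.Documents.book.book.lyx`. Note that spaces are only stripped from the directory part, so a file name containing spaces keeps them.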

Scraping for Event History

| Gabriel |

As I’ve previously mentioned, there’s a lot of great data out there but much of it is ephemeral so if you’re interested in change (which given our obsession with event history, many sociologists are) you’ve got to know how to grab it. I provided a script (using cron and curl) for grabbing specific pages and timestamping them but this doesn’t scale up very well to getting entire sites, both because you need to specify each specific URL and because it saves a complete copy each time rather than the diff. I’ve recently developed another approach that relies on wget and rsync and is much better for scaling up to a more ambitious scraping project.

Note that because of subtle differences between dialects of Unix, I’m assuming Linux for the data collection but Mac for the data cleaning.* Using one or the other for everything requires some adjustments. Also note that because you’ll want to “cron” this, I don’t recommend running it on your regular desktop computer unless you leave it on all night. If you don’t have server space (or an old computer on which you can install Linux and then treat as a server), your cheapest option is probably to run it on a wall wart computer for about $100 (plus hard drive).

Wget is similar to curl in that it’s a tool for downloading internet content but it has several useful features, some of which aren’t available in curl. First, wget can do recursion, which means it will automatically follow links and thus can get an entire site as compared to just a page. Second, it reads links from a text file a bit better than curl. Third, it has a good time-stamping feature where you can tell it to only download new or modified files. Fourth, you can exclude files (e.g., video files) that are huge and you’re unlikely to ever make use of. Put these all together and it means that wget is scalable: it’s very good at getting and updating several websites.

Unfortunately, wget is good at updating, but not at archiving. It assumes that you only want the current version, not the current version and several archival copies. Of course this is exactly what you do need for any kind of event history analysis. That’s where rsync comes in.

Rsync is, as the name implies, a syncing utility. It’s commonly used as a backup tool (both remote and local). However the simplest use for it is just to sync several directories and we’ll be applying it to a directory structure like this:

project/
  current/
  backup/
    t0/
    t1/
    t2/
  logs/

In this set up, wget only ever works on the “current” directory, which it freely updates. That is, whatever is in “current” is a pretty close reflection of the current state of the websites you’re monitoring. The timestamped stuff, which you’ll eventually be using for event history analysis, goes in the “backup” directories. Every time you run wget you then run rsync after it so that next week’s wget run doesn’t throw this week’s wget run down the memory hole.

The first time you do a scrape you basically just copy current/ to backup/t0. However if you were to do this for each scrape it would waste a lot of disk space since you’d have a lot of identical files. This is where incremental backup comes in, which Mac users will know as Time Machine. You can use hard links (similar to aliases or shortcuts) to get rsync to accomplish this.** The net result is that backup/t0 takes the same disk space as current/ but each subsequent “backup” directory takes only about 15% as much space. (A lot of web pages are generated dynamically and so they show up as “recently modified” every time, even if there’s no actual difference with the “old” file.) Note that your disk space requirements get big fast. If a complete scrape is X, then the amount of disk space you need is approximately 2 * X + .15 * X * number of updates. So if your baseline scrape is 100 gigabytes, this works out to a full terabyte after about a year of weekly updates.
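To make the arithmetic concrete, here is the formula above as a throwaway shell function. The name `disk_needed` is made up, the 15% figure is the rough estimate from the paragraph above rather than a constant of nature, and the shell’s integer arithmetic rounds down.

```shell
# disk_needed X N
# Approximate disk space for a baseline scrape of size X after N updates,
# using 2 * X + .15 * X * N (same units as X, rounded down).
# (disk_needed is a hypothetical helper.)
disk_needed() {
    echo $(( 2 * $1 + 15 * $1 * $2 / 100 ))
}
```

With a 100 gigabyte baseline and 52 weekly updates, `disk_needed 100 52` gives 980, i.e., just shy of a terabyte.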

Finally, when you’re ready to analyze it, just use mdfind (or grep) to search the backup/ directory (and its subdirectories) for the term whose diffusion you’re trying to track and pipe the results to a text file. Then use a regular expression to parse each line of this query into the timestamp and website components of the file path to see on which dates each website used your query term — exactly the kind of data you need for event history. Furthermore, you can actually read the underlying files to get the qualitative side of it.
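As a sketch of that last step using grep rather than mdfind, something like the following would do. It assumes that each subdirectory of backup/ is named for its timestamp and that wget kept its default of one directory per host underneath it, so that paths look like backup/TIMESTAMP/HOST/…; the function name `term_dates` is hypothetical.

```shell
# term_dates TERM BACKUPDIR
# Print "timestamp<TAB>website" once for every backup in which some file
# from that website contains TERM (treated as a fixed string).
# (term_dates is a hypothetical helper.)
term_dates() {
    term=$1
    backup=$2
    # list matching files relative to the backup directory, e.g.
    #   ./20100908/www.example.com/about/index.html
    ( cd "$backup" && grep -rlF -- "$term" . ) |
        # field 2 of the path is the timestamp, field 3 is the host
        awk -F/ '{ print $2 "\t" $3 }' |
        sort -u
}
```

Running `term_dates "some phrase" ~/Documents/project/backup > hits.txt` gives you a two-column file you can read straight into Stata or R. Since the file paths are still on disk you can go back and read the pages themselves.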

So on to the code. The wget part of the script looks like this

DATESTAMP=`date '+%Y%m%d'`
cd ~/Documents/project
mkdir logs/$DATESTAMP
cd current
wget -S --output-file=../logs/$DATESTAMP/wget.log --input-file=../links.txt -r --level=3 -R mpg,mpeg,mp4,au,mp3,flv,jpg,gif,swf,wmv,wma,avi,m4v,mov,zip --tries=10 --random-wait --user-agent=""

That’s what it looks like the first time you run it. When you’re just trying to update “current/” you need to change “wget -S” to “wget -N” but aside from that this first part is exactly the same. Also note that if links.txt is long, I suggest you break it into several parts. This will make it easier to rerun only part of a large scrape, for instance if you’re debugging, or there’s a crash, or if you want to run the scrape only at night but it’s too big to completely run in a single night. Likewise it will also allow you to parallelize the scraping.
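One way to break up links.txt is with the standard split command. This is only a sketch: the helper name split_links, the chunk size of 50, and the links_part_ prefix are all arbitrary choices of mine.

```shell
#!/bin/sh
# Hypothetical helper: break a long URL list into chunks of N lines each.
# split names the pieces <prefix>aa, <prefix>ab, and so on.
split_links() {
    links_file=$1
    lines_per_chunk=$2
    prefix=$3
    split -l "$lines_per_chunk" "$links_file" "$prefix"
}

# Usage: chunks of 50 URLs, then one wget run per chunk.
# split_links links.txt 50 links_part_
# for part in links_part_*; do
#     wget --input-file="$part" ...   # otherwise the same options as above
# done
```

Each chunk can then be rerun on its own after a crash, or handed to a separate wget process if you want to parallelize.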

Now for the rsync part. After your first run of wget, run this code.

cd ..
rsync -a current/ backup/baseline/

After your update wget runs, you do this.

cd ..
cp -al backup/baseline/ backup/$DATESTAMP/
rsync -av --delete current/ backup/$DATESTAMP/

* The reason to use Linux for data collection is that OS X doesn’t include wget and has an older version of the cp command, though it’s possible to solve both issues by using Fink to install wget and by rewriting cp in Mac/BSD syntax. The reason to use Mac for data analysis is that mdfind is faster (at least once it builds an index) and can read a lot of important binary file formats (like “.doc”) out of the box, whereas grep only likes to read text-based formats (like “.htm”). There are apparently Linux programs (e.g., Beagle) that allow indexed search of many file formats, but I don’t have personal experience with using them as part of a script.

** I adapted this use of hard links and rsync from this tutorial, but note that there are some important differences. He’s interested in a rolling “two weeks ago,” “last week,” “this week” type of thing, whereas I’m interested in absolute dates and don’t want to overwrite them after a few weeks.

Hide iTunes Store/Ping

| Gabriel |

As a cultural sociologist who has published research on music as cultural capital, I understand how my successful presentation of self depends on me making y’all believe that I only listen to George Gershwin, John Adams, Hank Williams, the Raveonettes, and Sleater-Kinney, as compared to what I actually listen to 90% of the time, which is none of your fucking business. For this reason, along with generally being a web 2.0 curmudgeon, I’m not exactly excited about iTunes’ new social networking feature “Ping.” Sure, it’s opt-in and I appreciate that, but I am so actively uninterested in it that I don’t even want to see its icon in the sidebar. Hence I wrote this one-liner that hides it (along with the iTunes store).

defaults write com.apple.iTunes disableMusicStore -bool TRUE

My preferred way of running it is to use Automator to run it as a service in iTunes that takes no input.

Unfortunately it also hides the iTunes Store, which I actually use a couple times a month. To get the store (and unfortunately, Ping) back, either click through an iTunes store link in your web browser or run the command again but with the last word as “FALSE”.

Grep nlogo

| Gabriel |

NetLogo has pretty good documentation but sometimes I’d like to see a bit more example code. Fortunately “.nlogo” files are stored as text, which means you can grep them to find examples of how a given function is used in the model library. For instance this command will give you a list of models in the model library that use the function “layout-circle.”

grep -l -r 'layout-circle' "/Applications/NetLogo 4.1/models/Sample Models/"
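If you’d rather see the matching lines themselves instead of just file names, a variation like the following sketch prints each hit with its line number and a couple of lines of context. The helper name nlogo_examples is hypothetical, and the two-line context is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: print each use of a NetLogo primitive with line numbers
# and two lines of context on either side, searching only .nlogo files.
nlogo_examples() {
    primitive=$1
    models_dir=$2
    grep -r -n -F -C 2 --include='*.nlogo' -- "$primitive" "$models_dir"
}

# Usage:
# nlogo_examples layout-circle "/Applications/NetLogo 4.1/models/Sample Models/"
```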

Note that NetLogo itself only allows one open file at a time which is why I like to have the example code open in a text editor while the program I’m working on is open in NetLogo itself. Personally, I use TextMate for this, in part because I like it in general but also because the Logo bundle works pretty well with NetLogo and features like code-folding are especially useful for NetLogo coding style.
