Posts tagged ‘Stata’

Oscar Appeal

| Gabriel |

This post contains two Stata do-files for constructing the “Oscar appeal” variable at the center of Rossman & Schilke “Close But No Cigar.”

Now These Are the Names, Pt 1

| Gabriel |

There’s a lot of great research on names and I’ve been a big fan of it for years, although it’s hard to reconcile with my own favorite diffusion models, since names are a flow whereas the stuff I’m interested in generally concerns diffusion across a finite population.

Anyway, I was inspired to play with this data by two things in conversation. The one I’ll discuss today is that somebody repeated a story about a girl named “Lah-d,” which is pronounced “La dash da” since “the dash is not silent.”

This appears to be a slight variation on an existing apocryphal story, but it reflects three real social facts that are well documented in the name literature. First, black girls have the most eclectic names of any demographic group, with a high premium put on creativity and about 30% having unique names. Second, even when their names are unique coinages they still follow systematic rules, as with the characteristic prefix “La” and consonant pair “sh.” Third, these distinctly black names are an object of bewildered mockery (and a basis for exclusion) by others, which is the appeal in retelling this and other urban legends on the same theme.*

To tell if there was any evidence for this story I checked the Social Security data, but the web searchable interface only includes the top 1000 names per year. Thus checking on very rare names requires downloading the raw text files. There’s one file per year, but you can efficiently search all of them from the command line by going to the directory where you unzipped the archive and grepping.

cd ~/Downloads/names

grep '^Lah-d' *.txt

grep '^Lahd' *.txt

As you can see, this name does not appear anywhere in the data. Case closed? Well, there’s a slight caveat in that for privacy reasons the data only include names that occur at least five times in a given birth year. So while it includes rare names, it misses extremely rare names. For instance, you also get a big fat nothing if you do this search:

grep '^Reihan' *.txt

This despite the fact that I personally know an American named Reihan. (Actually I’ve never asked him to show me a photo ID so I should remain open to the possibility that “Reihan Salam” is just a memorable nom de plume and his birth certificate really says “Jason Miller” or “Brian Davis”).

For names that do meet the minimal threshold, though, you can use grep as the basis for a quick and dirty time series. To automate this I wrote a little Stata script called grepnames. To call it, you give it two arguments: the (case-sensitive) name you’re looking for and the directory where you put the name files. It gives you back a time series of how many births had that name.

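capture program drop grepnames

program define grepnames

local name "`1'"

local directory "`2'"

tempfile namequery

*grep every file in the directory for lines that start with the name

shell grep -r '^`name'' "`directory'" > `namequery'

insheet using `namequery', clear

*v1 holds the file path, a colon, then the name; the regex expects the directory argument to end with a slash

gen year=real(regexs(1)) if regexm(v1,"`directory'yob([12][0-9][0-9][0-9])\.txt")

gen name=regexs(1) if regexm(v1,"`directory'yob[12][0-9][0-9][0-9]\.txt:(.+)")

*drop longer names that merely begin with the search string

keep if name=="`name'"

ren v3 frequency

ren v2 sex

*add zero-frequency rows for sex and year combinations missing from the data

fillin sex year

recode frequency .=0

sort year sex

twoway (line frequency year if sex=="M") (line frequency year if sex=="F"), legend(order(1 "Male" 2 "Female")) title(`"Time Series for "`name'" by Birth Cohort"')

end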

For instance:

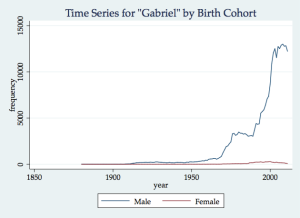

grepnames Gabriel "/Users/rossman/Documents/codeandculture/names/"

Note that these numbers are not scaled for the size of the cohorts, either in reality or as observed by the Social Security Administration. (Their data is noticeably worse for cohorts prior to about 1920). Still, it’s pretty obvious that my first name has grown more popular over time.

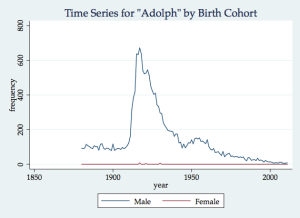

We can also replicate a classic example from Lieberson of a name that became less popular over time, for rather obvious reasons.

grepnames Adolph "/Users/rossman/Documents/codeandculture/names/"

Next time, how diverse are names over time with thoughts on entropy indices.

(Also see Jay’s thoughts on names, as well as taking inspiration from my book to apply Bass models to film box office).

* Yes, I know that one of those stories is true but the interesting thing is that people like to retell it (and do so with mocking commentary), not that the underlying incident is true. It is also true that yesterday I had eggs and coffee for breakfast, but nobody is likely to forward an e-mail to their friends repeating that particular banal but accurate nugget.

Using filefilter to make insheet happy

| Gabriel |

As someone who mostly works with text files with a lot of strings, I often run into trouble with the Stata insheet command being extremely finicky about how it takes data. Frequently it ends up throwing out half the rows because at some point in the file there’s a stray character and Stata not only throws out that row but everything thereafter. In my recent work on IMDb I’ve gotten into the habit of first reading text files into Excel, then having Stata read the xlsx files. This is tolerable if you’re dealing with a relatively small number of files that you’re only importing once, but it won’t scale to repeated imports or a large number of files.

More recently, I’ve been dealing with data collected with the R library twitteR. Since tweets sometimes contain literal quotes, twitteR escapes them with a backslash. However, Stata does not recognize this convention and it chokes when the quote characters are unbalanced. I realized this the other night when I was trying to test for left-censorship and fixed it using the batch find/replace in TextWrangler. Of course this is not scriptable and so I was contemplating taking the plunge into Perl when the Modeled Behavior hive mind suggested the Stata command filefilter. Using this command I can replace the escaped literal quotes (which choke Stata’s insheet) with literal apostrophes (which Stata’s insheet can handle).

filefilter foo.txt foo2.txt, from(\BS\Q) to(\RQ)

Problem solved, natively in Stata, and I have about 25% more observations. Thanks guys.
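As a minimal sketch of how this can sit inside an import script, you can filter into a tempfile and read that instead of the raw file. The file name tweets_raw.txt is hypothetical, and I'm assuming insheet can work out the delimiter on its own.

```stata
*tweets_raw.txt is a hypothetical file name
tempfile cleaned
*swap each backslash-escaped double quote for an apostrophe
filefilter tweets_raw.txt "`cleaned'", from(\BS\Q) to(\RQ)
*filefilter leaves the number of substitutions in r(occurrences)
display "escaped quotes replaced: " r(occurrences)
insheet using "`cleaned'", clear
```

Comparing the observation count before and after the filter is a quick way to see how many rows insheet had been throwing out.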

Control for x

| Gabriel |

An extremely common estimation strategy, which Roland Fryer calls “name that residual,” is to throw controls at an effect then say whatever effect remains net of the controls is the effect. Typically as you introduce controls the effect goes down, but not all the way down to zero. Here’s an example using simulated data where we do a regression of y (continuous) on x (dummy) with and without control (continuous and negatively associated with x).

| | (1) | (2) |
|---|---|---|
| x | -0.474*** | -0.257*** |
| | (0.073) | (0.065) |
| control | | 0.492*** |
| | | (0.023) |
| _cons | 0.577*** | 0.319*** |
| | (0.054) | (0.048) |
| N | 1500 | 1500 |

So as is typical, we see that even if you allow that x=1 tends to be associated with low values for control, you still see an x penalty. However this is a spurious finding since by assumption of the simulation there is no actual net effect of x on y, but only an effect mediated through the control.

This raises the question of what it means to have controlled for something. Typically we’re not really controlling for something perfectly, but only for a single part of a bundle of related concepts (or if you prefer, a noisy indicator of a latent variable). For instance when we say we’ve controlled for “human capital” the model specification might only really have self-reported highest degree attained. This leaves out both other aspects of formal education (eg, GPA, major, institution quality) and other forms of HC (eg, g and time preference). These related concepts will be correlated with the observed form of the control, but not perfectly. Indeed the same problem arises even if we don’t have “omitted variable bias” but just measurement error on a single variable, as is the assumption of this simulation.

To get back to the simulation, let’s appreciate that the “control” is really the control as observed. If we could perfectly specify the control variable, the main effect might go down all the way to zero. In fact in the simulation that’s exactly what happens.

| | (1) | (2) | (3) |
|---|---|---|---|
| x | -0.474*** | -0.257*** | -0.005 |
| | (0.073) | (0.065) | (0.053) |
| control | | 0.492*** | |
| | | (0.023) | |
| control_good | | | 0.980*** |
| | | | (0.025) |
| _cons | 0.577*** | 0.319*** | 0.538*** |
| | (0.054) | (0.048) | (0.038) |
| N | 1500 | 1500 | 1500 |

That is, when we specify the control with error much of the x penalty persists. However when we specify the control without error the net effect of x disappears entirely. Unfortunately in reality we don’t have the option of measuring something perfectly and so all we can do is be cautious about whether a better specification would further cram down the main effect we’re trying to measure.

Here’s the code:

*eststo and esttab come from the estout package (ssc install estout)

clear

set obs 1500

gen x=round(runiform())

gen control_good=rnormal(.05,1) - x/2

gen y=control_good+rnormal(0.5,1)

gen control=control_good+rnormal(0.5,1)

eststo clear

eststo: reg y x

eststo: reg y x control

esttab, se b(3) se(3) nodepvars nomtitles

eststo: reg y x control_good

esttab, se b(3) se(3) nodepvars nomtitles

*have a nice day
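To see how much of the x penalty is an artifact of the noise, here is a rough sketch that keeps the same data-generating process but varies the standard deviation of the error added to the control. The names sd_noise and control_obs and the seed are made up for this illustration. Within each level of x the true control has variance 1, so an observed control with noise SD s has reliability 1/(1+s^2), and you would expect a coefficient on x of roughly -0.5*(1 - 1/(1+s^2)). For s=1 that is about -0.25, which is in line with column 2 above.

```stata
*sketch: same simulation, varying the noise in the observed control
*sd_noise, control_obs, and the seed are arbitrary choices for illustration
clear
set seed 20111007
set obs 1500
gen x=round(runiform())
gen control_good=rnormal(.05,1) - x/2
gen y=control_good+rnormal(0.5,1)
foreach sd_noise in 0 0.5 1 2 4 {
    *observed control = true control + noise with mean 0.5 and SD sd_noise
    gen control_obs=control_good+0.5+`sd_noise'*rnormal()
    quietly reg y x control_obs
    display "noise SD = `sd_noise'" _col(20) "b[x] = " %6.3f _b[x] _col(40) "b[control_obs] = " %6.3f _b[control_obs]
    drop control_obs
}
```

With no noise the coefficient on x should sit near zero, and as the noise grows it should drift back toward the uncontrolled estimate.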

Recursively building graph commands

| Gabriel |

I really like multi-line graphs and scatterplots where the marker color/style reflects categories. Such graphs are both more compact than just having multiple graphs and they make it easier to compare different things. The way you do this is with “twoway,” a lot of parentheses, and the “if” condition. For example:

twoway (kdensity x if year==1985) (kdensity x if year==1990) (kdensity x if year==1995) (kdensity x if year==2000) (kdensity x if year==2005), legend(order(1 "1985" 2 "1990" 3 "1995" 4 "2000" 5 "2005"))

Unfortunately such graphs can be difficult to script. This is especially so for the legends, which by default show the variable name rather than the selection criteria. I handle this by recursively looping over a local, which in the case of the legend involves embedded quote marks.

capture program drop multigraph

program define multigraph

local var `1'

local interval `2'

local command ""

local legend ""

local legendtick=1

forvalues i=1985(`interval')2005 {

local command "`command' (kdensity `var' if year==`i')"

local legend `legend' `legendtick' `" `i' "'

*"

local legendtick=`legendtick'+1

}

disp "twoway ""`command'" ", legend:" "`legend'"

twoway `command' , legend(order(`legend'))

end

To replicate the hard-coded command above, you’d call it like this:

multigraph x 5
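The same recursive trick works if you'd rather not hard-code the 1985 to 2005 range. Below is a hypothetical variation (multigraph2 and its second argument are names invented for this sketch) that pulls the categories from the data with levelsof. It assumes the grouping variable is numeric.

```stata
capture program drop multigraph2
program define multigraph2
    *first argument: variable to plot; second argument: numeric grouping variable
    local var `1'
    local group `2'
    local command ""
    local legend ""
    local legendtick=1
    *store the distinct values of the grouping variable in a local
    quietly levelsof `group', local(levels)
    foreach i of local levels {
        local command "`command' (kdensity `var' if `group'==`i')"
        local legend `legend' `legendtick' `" `i' "'
        local legendtick=`legendtick'+1
    }
    twoway `command' , legend(order(`legend'))
end
```

You would call it as "multigraph2 x year", which draws one density per distinct value of year.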

Importnew.ado (requires R)

| Gabriel |

After hearing from two friends in a single day who are still on Stata 10 that they were having trouble opening Stata 12 .dta files, I rewrote my importspss.ado script to translate Stata files into an older format, by default Stata 9.

I’ve tested this with Stata 12 and in theory it should work with older versions, but please post positive or negative results in the comments. Remember that you need to have R installed. Anyway, I would recommend handling the backwards compatibility issue on the sender’s side with the native “saveold” command, but this should work in a pinch if for some reason you can’t impose on the sender to fix it and you need to fix it on the recipient’s end. Be especially careful if the dataset includes formats that Stata’s been updating a lot lately (e.g., the date formats).

The syntax is just:

importnew foo.dta

Here’s the code:

*importnew.ado

*by GHR 10/7/2011

*this script uses R to make new Stata files backwards compatible

* that is, use it when your collaborator forgot to use "saveold"

*use great caution if you are using data formats introduced in recent versions

* eg, %tb

*DEPENDENCY: R and library(foreign)

*if R exists but is not in PATH, change the reference to "R" in line 29 to be the specific location

capture program drop importnew

program define importnew

set more off

local future `1'

local version=9 /* version number for your copy of Stata */

local obsolete=round(runiform()*1000)

local sourcefile=round(runiform()*1000)

capture file close rsource

file open rsource using `sourcefile'.R, write text replace

file write rsource "library(foreign)" _n

file write rsource `"directory <- "`c(pwd)'" "' _n

file write rsource `"future <- "`future'" "' _n

file write rsource `"obsolete <- paste("`obsolete'",".dta",sep="") "' _n

file write rsource "setwd(directory)" _n

file write rsource `"data <- read.dta(future, convert.factors=TRUE, missing.type=FALSE)"' _n

file write rsource `"write.dta(data, file=obsolete, version=`version')"' _n

file close rsource

shell R --vanilla <`sourcefile'.R

erase `sourcefile'.R

use `obsolete'.dta, clear

erase `obsolete'.dta

end

*have a nice day

So Long Arial

| Gabriel |

Now that I’ve been looking into setting my graphs in a serif font instead of the default Arial, I find that changing fonts in Stata graphs is surprisingly difficult. It’s not that it’s intrinsically difficult, just confusing to learn because Stata’s handling of fonts breaks with the general Stata convention of specifying options as part of a command and is instead more similar to the Gnuplot style of changing device preferences then executing a command targeting the device. One implication of this is that there’s no option to change the graph font in the graph GUI interface (which is how I usually learn new bits of command-line syntax).

Another issue is that graphs aren’t WYSIWYG. Rather the interactive display graph can look different from the graph saved to disk and that in turn can be inconsistent depending on what file format you use. To avoid confusion I just set everything at once, like this:

local graphfont "Palatino"

graph set eps fontface `graphfont'

graph set eps fontfaceserif `graphfont'

graph set eps /*echo back preferences*/

graph set window fontface `graphfont'

graph set window fontfaceserif `graphfont'

graph set window /*echo back preferences*/

One of the oddities is that there is no set of PDF options. Rather (at least on a Mac) you control the PDF device as part of the display device (“window”). My understanding is that Stata for Mac relies on the low level OS X PDF support for creating PDFs, and this would explain why it considers PDF to be part of the display device rather than one of the file type devices (as well as why it won’t make PDFs if you suppress screen rendering, why Stata for Mac got PDF support earlier than the other platforms, and why the PDFs looked like this until they fixed it in 11.2 and 12). Note that this means that your PDF will use the EPS fonts if you use my graphexportpdf.ado script and the display fonts if you use the base “graph export foo.pdf” syntax.

I haven’t checked, but I wouldn’t be surprised if the PDF preferences in Stata for Windows are controlled by the EPS preferences rather than the display preferences. In any case, I recommend just setting all devices the same so it won’t matter.

(Thanks to Eric Booth, whose StataList post helped me figure this out).

Adding elements to graphs as a slideshow

| Gabriel |

One of the tricks to a successful presentation is to limit what your audience sees so they don’t get ahead of you and also to preserve a general sense of timing and flow. This helps keep the audience’s attention and also is good for focusing expectations in such a way that the next bit is counter-intuitive and therefore interesting. Nothing is so boring as sitting in a talk and seeing ten bullet points and realizing that the speaker is only on bullet number three.

Similarly if you’re using graphs in a talk (which you should as much as possible since they read better than tables), you may only want to reveal part of a graph as you talk about it, then reveal the next bit when you’re ready. The most obvious way to do this is to just crop the graph or cover it with boxes that match the background or something. Unfortunately that’s ugly and clunky and doesn’t work if the graph elements are tightly commingled. Another way to do it is to generate two graphs, one of which has the elements and the other of which doesn’t. The problem with this is that the graphs don’t match up properly. For instance, if you have a line graph and you keep adding lines to it, the legend will first appear and then grow larger, crowding out the graph itself.

Ideally, what you want is a set of graphs that are completely identical except some elements are missing in one version which are added in the other version. You can then line the graphs up, talk about the first set of elements, and then do a smooth transition to the version with the full set of elements. Here’s an example from the talk I gave yesterday. In order to explain crossover I first show the song’s native formats then dissolve to also show the crossover formats.

Here’s how I did it. The basic trick is that Stata can create transparent graph elements by setting the color to “none”. You do the exact same graph multiple times; you just set colors to be transparent when you want to conceal elements. That is, the first twoway command below is identical to the second except that in the first the Top_40 and Mainstream_AC lines set the line color to “none” instead of a color from Stata’s standard s2color scheme.

use final_f, clear

keep if artist=="SARA BAREILLES"

drop if format=="All" | format=="Other"

sum date

local maxdate=`r(max)'

local mindate=`r(min)'

local interval=(`maxdate'-`mindate')/10

local interval=round(`interval',7)

twoway (line Nt_inc_p date if format=="AAA_Rock", lwidth(thick) lcolor(navy)) /*

*/ (line Nt_inc_p date if format=="Hot_AC", lwidth(thick) lcolor(maroon)) /*

*/ (line Nt_inc_p date if format=="Top_40", lwidth(thick) lcolor(none)) /*

*/ (line Nt_inc_p date if format=="Mainstream_AC", lwidth(thick) lcolor(none)) /*

*/ , xtitle("") xmtick(`mindate'(7)`maxdate') xlabel(`mindate'(`interval')`maxdate', labsize(vsmall) angle(forty_five) format(%tdMon_dd,_CCYY)) legend(order (1 "AAA Rock" 2 "Hot AC" 3 "Top 40" 4 "Mainstream AC")) graphregion(fcolor(white))

graph export $images/sarabareilles_lovesong_1.pdf, replace

twoway (line Nt_inc_p date if format=="AAA_Rock", lwidth(thick) lcolor(navy)) /*

*/ (line Nt_inc_p date if format=="Hot_AC", lwidth(thick) lcolor(maroon)) /*

*/ (line Nt_inc_p date if format=="Top_40", lwidth(thick) lcolor(dkorange)) /*

*/ (line Nt_inc_p date if format=="Mainstream_AC", lwidth(thick) lcolor(forest_green)) /*

*/ , xtitle("") xmtick(`mindate'(7)`maxdate') xlabel(`mindate'(`interval')`maxdate', labsize(vsmall) angle(forty_five) format(%tdMon_dd,_CCYY)) legend(order (1 "AAA Rock" 2 "Hot AC" 3 "Top 40" 4 "Mainstream AC")) graphregion(fcolor(white))

graph export $images/sarabareilles_lovesong_2.pdf, replace
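Since that code depends on my own dataset, here is a self-contained sketch of the same trick using the uslifeexp example data that ships with Stata. The file names slide_1.pdf and slide_2.pdf and the local femalecolor are made up for the example, and it assumes your copy of Stata can export PDF. Looping over the color guarantees that the two graphs are identical in every other respect.

```stata
*sketch of the transparent-line trick with Stata's bundled example data
sysuse uslifeexp, clear
local slide=1
*first pass hides the female line, second pass reveals it
foreach femalecolor in none maroon {
    twoway (line le_male year, lwidth(thick) lcolor(navy)) (line le_female year, lwidth(thick) lcolor(`femalecolor')), legend(order(1 "Male" 2 "Female")) graphregion(fcolor(white))
    graph export slide_`slide'.pdf, replace
    local slide=`slide'+1
}
```

Because the legend and axes are computed from the same plots both times, the two exported files should line up exactly when you dissolve from one to the other.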

Executing do-files from text editors

| Gabriel |

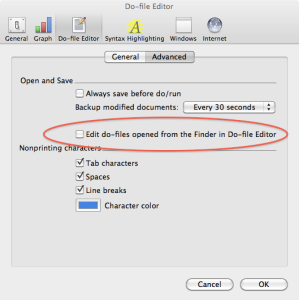

Stata now defaults to opening a do-file in the integrated do-file editor rather than just running it. The integrated do-file editor is now pretty good, but I’m a creature of habit and I prefer to use an external text editor (usually TextMate) then pipe to Stata. The current default behavior makes this somewhat inconvenient.

Fortunately, you can change this pretty easily in the preferences. Open Stata’s preferences, go to the “Do-File” tab and then the “advanced” sub-tab. Now uncheck the box that says “Edit do-files opened from the Finder in Do-file Editor.” Even though it says “from the Finder” this also applies to do-files launched pretty much any way you can think of: after-market file managers, text editors, etc.

Alternately, you could rewrite your text editor’s Stata support to use Stata console, but that’s probably overkill.

Misc Links

| Gabriel |

- Useful detailed overview of Lion. The user interface stuff doesn’t interest me nearly as much as the tight integration of version control and “resume.” Also, worth checking if your apps are compatible. (Stata and Lyx are supposed to work fine. TextMate is supposed to run OK with some minor bugs. No word on R. Fink doesn’t work yet). It sounds good but I’m once again sitting it out for a few months until the compatibility bugs get worked out. Also, as with Snow Leopard many of the features won’t really do anything until developers implement them in their applications.

- I absolutely loved the NPR Planet Money story on the making of Rihanna’s “Man Down.” (Not so fond of the song itself, which reminds me of Bing Crosby and David Bowie singing “Little Drummer Boy” in matching cardigans). If you have any interest at all in production of culture read the blog post and listen to the long form podcast (the ATC version linked from the blog post is the short version).

- Good explanation of e, which comes up surprisingly often in sociology (logit regression, diffusion models, etc.). I like this a lot as in my own pedagogy I really try to emphasize the intuitive meaning of mathematical concepts rather than just the plug and chug formulae on the one hand or the proofs on the other.

- People are using “bimbots” to scrape Facebook. And to think that I have ethical misgivings about forging a user-agent string so wget looks like Firefox.
